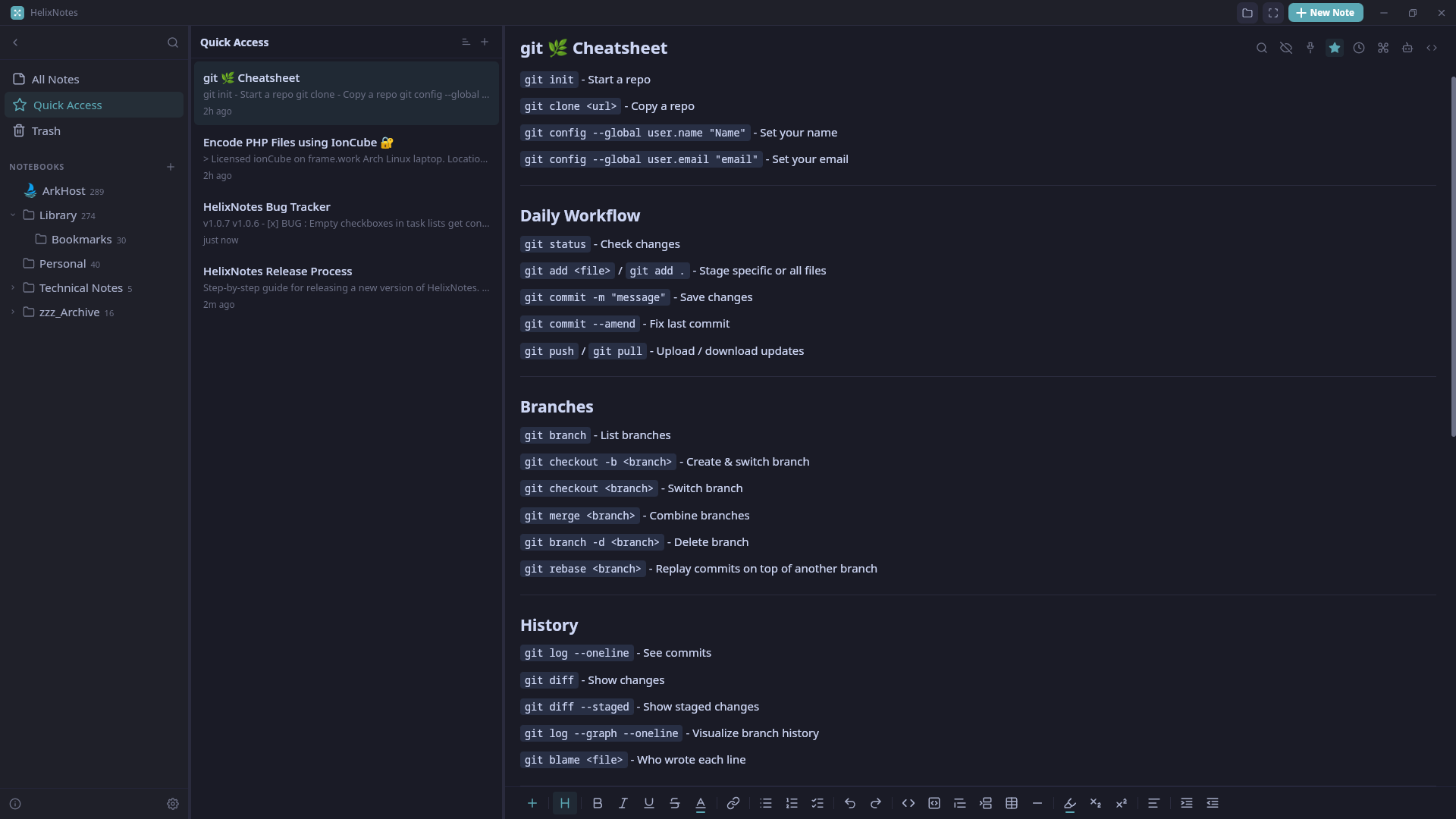

I built a note-taking app because the one I wanted didn’t exist. Clean UI, local .md files, no cloud, no account.

Built with Rust + Tauri 2.0 + SvelteKit. Full-text search powered by Tantivy. Graph view, AI writing tools (bring your own key), Obsidian import, version history.

Available for Linux (AppImage, APT, AUR), Windows, and macOS. Source: https://codeberg.org/ArkHost/HelixNotes

Not at the moment. Which local model would you like to see as an additional option?

I don’t know what is typical, but when I use AI locally I’ve been running llama.cpp with models downloaded from Hugging Face (e.g. QwenCoder). Then in my VS Code plugin (RooCode) I use the “OpenAI compatible” option to point it at my local server.

I’m not sure how hard that would be to get working, but my hope is that an “OpenAI compatible” option would cover it.
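For context on what an “OpenAI compatible” option usually means: the client sends a POST to `<base_url>/chat/completions` with a JSON body holding a model name and a list of role/content messages, and reads the reply from `choices[0].message.content`. The Python sketch below uses only the standard library. The function names, the model name, and the assumption that the base URL already ends in `/v1` are hypothetical illustrations, not HelixNotes code. llama.cpp’s `llama-server` listens on port 8080 by default, so `http://localhost:8080/v1` is assumed as the example base URL.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build (but do not send) a POST for an OpenAI-compatible chat endpoint.

    Assumption: base_url ends in /v1, e.g. "http://localhost:8080/v1" for a
    default llama.cpp llama-server. The model name is a hypothetical example.
    """
    url = base_url.rstrip("/") + "/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    # Local servers often ignore the key; the OpenAI format sends it as a Bearer token.
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask(base_url, model, prompt, api_key=None):
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(base_url, model, prompt, api_key)
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With a server running, something like `ask("http://localhost:8080/v1", "qwen-coder", "Summarize this note")` would return the reply text. Because only `base_url` changes between servers, the same client should also work against other OpenAI-compatible backends, such as Ollama at its default `http://localhost:11434/v1`.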

Ollama, LM Studio, llama.cpp